Sree’s newsletter is produced with Zach Peterson (@zachprague). Digimentors Tech Tip from Robert S. Anthony (@newyorkbob). Our sponsorship kit.

🗞 @Sree’s #NYTReadalong: Sunday’s guest was Amitava Kumar, Vassar College professor and author of the new, highly-acclaimed novel “My Beloved Life” and several other books. Watch a recording. You’ll find three years’ worth of archives at this link (we’ve been reading the paper aloud on social for 8+ years now). The Readalong is sponsored by Muck Rack. Interested in sponsorship opportunities? Email sree@digimentors.group and neil@digimentors.group.

🎯 Work with us! Our company, Digimentors, does digital and social consulting, as well as virtual/hybrid events production and all kinds of training. See our updated brochure (would love your feedback). Get in touch (no project too big or too small): sree@digimentors.group and neil@digimentors.group. If you’d rather chat, here’s my Calendly.

🤖 Deep dive into AI! Excited to partner with Josh Goldblum and Futurespaces to present a deep-dive workshop on AI. 🙌

3 hours 😮

Fully remote ✅

Friday, May 3, 1-4 pm ET 🗓

Here's a code for 50% off: SREE50 🎉

Learn more and sign up here. Makes a great Mother’s Day, Father’s Day or graduation gift, too!

***

WE HAVE NO IDEA WHAT WE’RE DOING WITH AI, but we’re clearly going to do it anyways.

Stanford just published the 2024 edition of its annual Artificial Intelligence Index Report, and it’s almost certainly the most comprehensive overview of the current state of AI you’ll find. There are nine chapters available for download individually (free!), and the sheer detail and expanse of the report make it impossible to go through in one sitting.

So, we’re taking up chapter 3, “Responsible AI,” which starts with nine chapter highlights that will make you question why exactly AI is becoming ubiquitous when there is such a plethora of risks and mistakes associated with the technology.

Robust and standardized evaluations for LLM responsibility are seriously lacking

Political deepfakes are easy to generate and difficult to detect

Researchers discover more complex vulnerabilities in LLMs

Risks from AI are a concern for businesses across the globe

LLMs can output copyrighted material

AI developers score low on transparency, with consequences for research

Extreme AI risks are difficult to analyze

The number of AI incidents continues to rise

ChatGPT is politically biased

Any thinking person can be forgiven for uttering a cynical, “oh… just those risks?” after reading through this list. Read aloud in a monotone voice, it sounds like the last 30 seconds of a prescription drug ad that tells you that the pill will lower your blood pressure, but you will probably get dysentery as a side effect.

The whole report is basically a couple hundred pages of concerns, questions, cautionary tales, and…more questions. It’s incredible how little we know about how AI even works—it’s maybe more shocking how that extends to the people who are working on the technology right now.

We simply don’t know.

Last September, I wrote about how technology companies have been given license to unleash their creations on society and then just sort of iterate from there. Thrusting a slightly better toaster on the world is one thing; fundamentally changing the way we communicate and consume information is quite another. In the last 15 years, social media and messaging apps have completely upended our very nature, and I am left wondering if we humans are really cut out to consume so much information so often and be expected to just accept that as normal and keep going.

Even after the printing press revolution, information consumption and communication over distance still took time—real time. Now, there is no time. There’s no time to cool off. There’s no time to read up on something. There’s no time to consider our requests, our responses to requests of us, our thoughts about 1,000 complicated issues with broad implications on topics outside our expertise.

From my essay, The Public as a Laboratory:

There was no way of knowing what Facebook’s explosion would mean for social media and what it would spawn, but it was also quite apparent quite quickly that things were going to get very big very fast. On the regulatory side, that was already too late, and I suspect the same may be true for AI.

Almost anyone in the world can use any number of generative AI tools for any number of tasks right now. The people who built these models have no idea what the outputs will be until they happen. This experiment is being conducted on all of us right now, knowingly in some cases, and very much unknowingly in countless others: job hunts, loan applications, and financial markets come to mind right away.

This was all just released to the entire world. There is no benevolence here, it’s a for-profit venture, and the profits have been quite handsome to date.

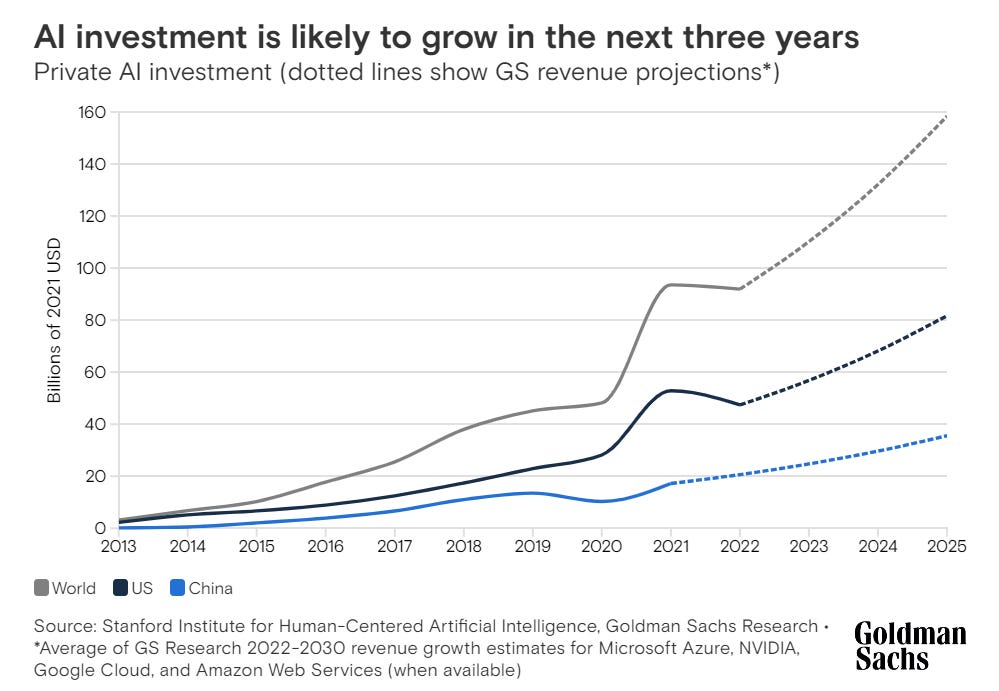

The effects of AI on the way the average person lives an average life will be profound in the medium and long terms, about which there is little debate. Investment in private AI firms will be $200 billion by next year, according to Goldman Sachs, and that will likely grow exponentially over the next decade at least.

In that same Goldman Sachs piece, the firm surveyed CEOs and most agreed that the second half of this decade is when AI will be more mature as a technology, and as such much more prevalent.

Unlike social media and messaging, AI will not be so overt and in-our-face(s). It will be in the background, making real decisions that affect real people. I worry less about “killer AI blowing up humans and ushering in the robot era” than I do about the piling up of non-human decision making that we, at the moment, seem unlikely to do much about. Facebook, Instagram and the platform formerly known as Twitter were and continue to be right in front of us—we make the choice to open those apps.

With AI, there will be a lot of generative uses, but the vast majority of AI use cases lie in the background. When you apply for a mortgage you sign everything, turn in your documents, and you wait. You wait for a few people and a lot of computing power to decide if you’re a worthy borrower—it’s a black box now, and it’s one that will turn into a proper black hole when AI really drives those processes.

The Stanford report drives this concern home. There are too many paragraphs like this to pull-quote in one newsletter:

Unlike general capability evaluations, there is no universally accepted set of responsible AI benchmarks used by leading model developers. TruthfulQA, at most, is used by three out of the five selected developers. Other notable responsible AI benchmarks like RealToxicityPrompts, ToxiGen, BOLD, and BBQ are each utilized by at most two of the five profiled developers. Furthermore, one out of the five developers did not report any responsible AI benchmarks, though all developers mentioned conducting additional, nonstandardized internal capability and safety tests.

The inconsistency in reported benchmarks complicates the comparison of models, particularly in the domain of responsible AI.

There are a lot of things to measure, but there is no standard for measuring much of anything that AI does, generative or otherwise. I know a lot of smart people who get a bit of brain melt when talking about AI for too long, something that does not give me hope that our political class as currently constructed will be up to the challenge of regulating much of anything. We have no real regulatory framework for AI—nothing about privacy, nothing about transparency, nothing about trustworthiness… nothing.

Read chapter 3 of the Stanford AI Index and see for yourself.

Maybe it’s interesting that the one thing we have in common with AI is that neither of us is prepared for the relationship we’re being forced into. Maybe it gets less interesting when considering the consequences.

— Sree

Twitter | Instagram | LinkedIn | YouTube | Threads

Sponsor message

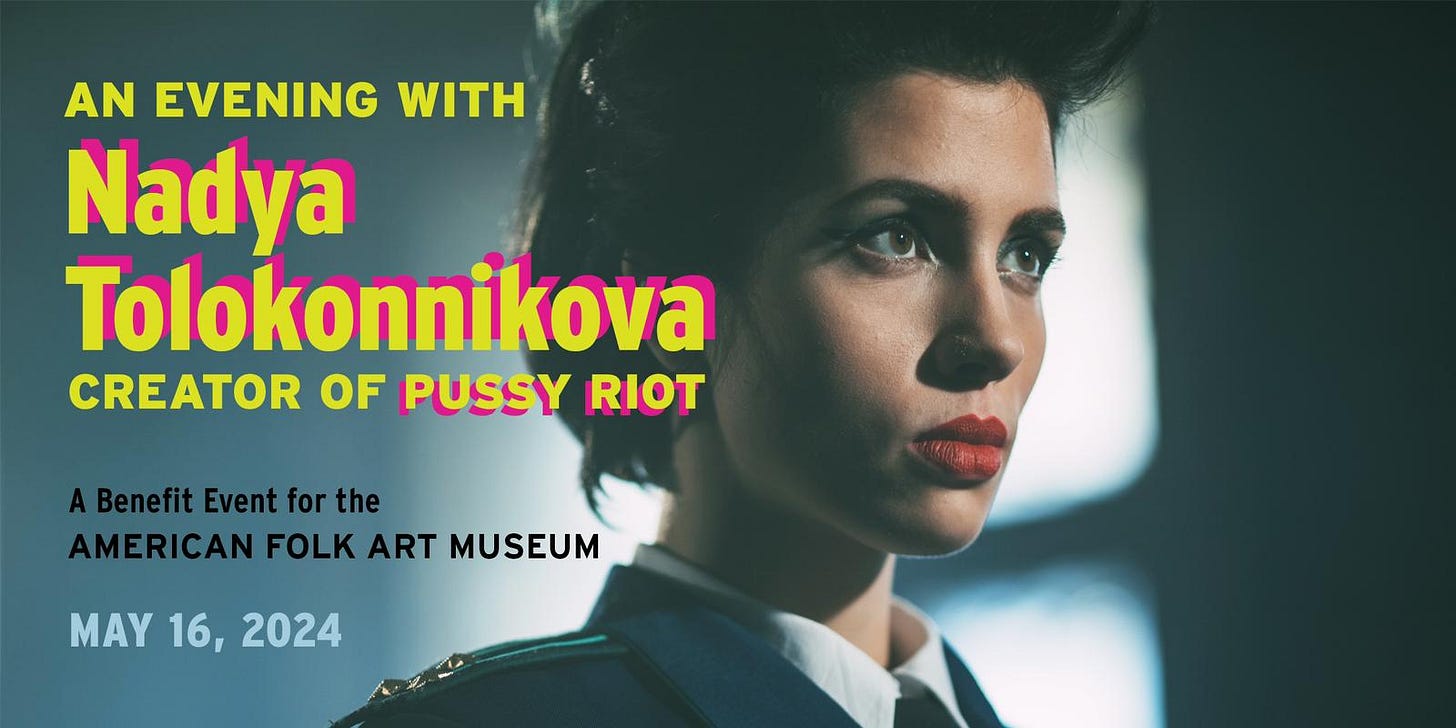

Nadya Tolokonnikova Headlines American Folk Art Museum Benefit Event

This special event is planned for Thursday, May 16, 2024, at the Society for Ethical Culture at 2 W 64th St, New York, NY 10023. The event will showcase Tolokonnikova’s recent artworks in a pop-up exhibition, and feature what is sure to be a captivating Q&A session about her practice followed by a musical performance. Tickets will support the American Folk Art Museum's mission to support and champion the work of self-taught and folk artists. Learn more here.

DIGIMENTORS TECH TIP | Google’s New Travel Features Mix Artificial Intelligence with Yours

By Robert S. Anthony

Each week, veteran tech journalist Bob Anthony shares a tech tip you don’t want to miss. Follow him @newyorkbob.

Never mind if you found frost on your windowsill today; the summer travel season is just around the corner. With that in mind, Google recently tweaked its search and map services to improve users’ travel-planning experiences and add human- and AI-generated advice.

The point, according to Google representatives at a recent New York press event, is to create personalized guides and tips that Google Search and Maps users can take advantage of to help them unearth more than they might from generic travel guides.

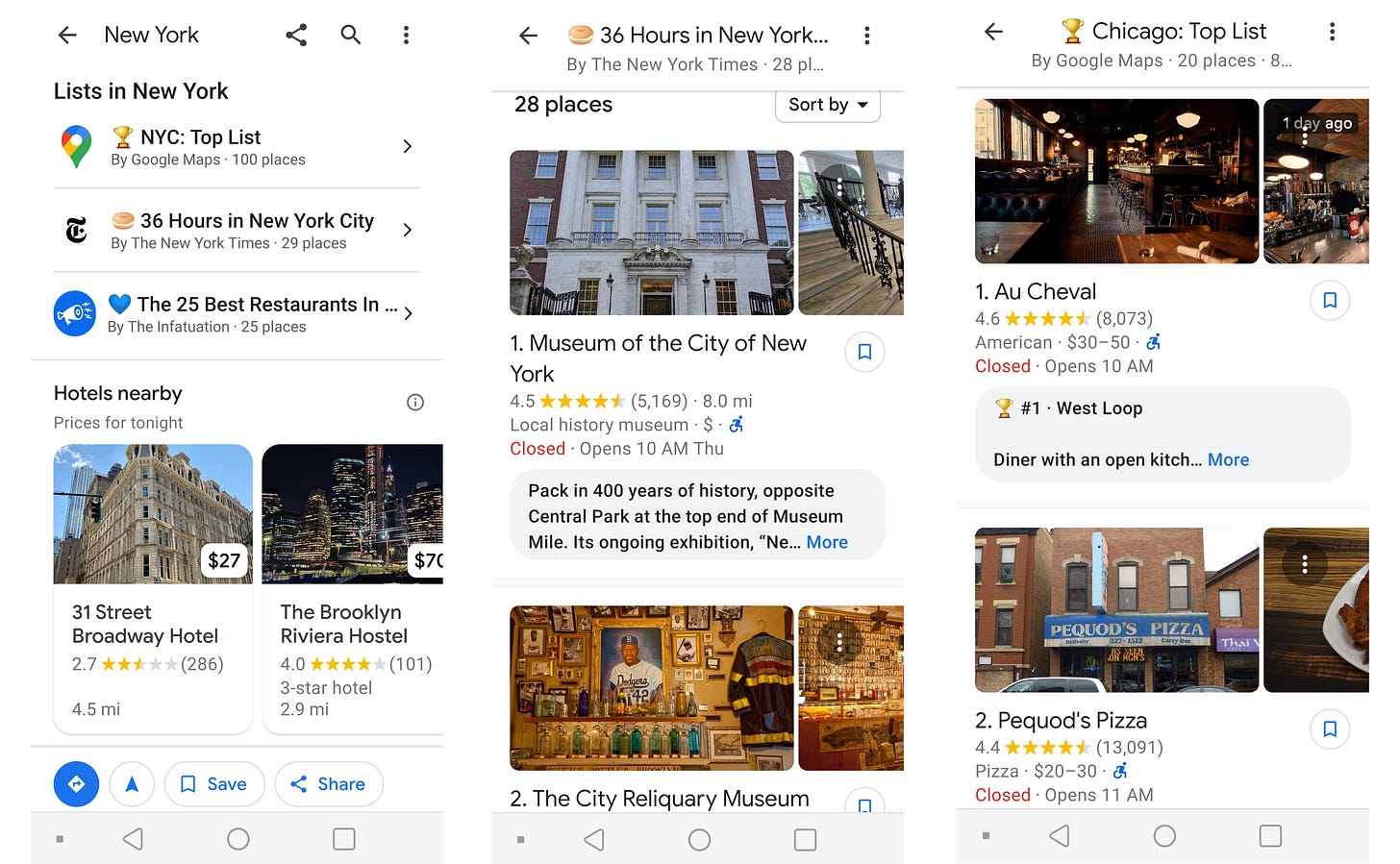

For example, the Google Maps Android app experience has been enhanced with lists that appear when users search for certain popular destinations. A search for “New York” not only returns basic city information and a map, but swiping up may uncover lists like “Trending,” which shows the local spots that have been heavily searched recently, or “Gems,” which tries to uncover under-the-radar tourist options.

Some lists, like “NYC: Top List” are created by Google, while others are curated by its partners, like “36 Hours in New York City,” by the New York Times. The new lists were rolled out initially for 40 cities in the US and Canada, but more will be added, according to Andrew Duch, director of product for Google Maps.

Swiping up on the mobile Android Maps app also gives access to a Reviews section where independent Local Guides offer their own tips and opinions on tourist destinations. While most guides provide useful information, not everything is accurate.

For example, one Local Guide wrote that The Cloisters is a “cool place about 40-70 min north of NYC” and noted that the popular medieval art and architecture museum is reachable by subway. The Cloisters is in upper Manhattan, well within the confines of New York City, and the subway system doesn’t extend outside of the city anyway.

Google’s “Circle to Search” feature, available only on recent Google Pixel phones and the Samsung Galaxy S24, can be useful when traveling where users don’t know the local language or can’t identify an object. Circle to Search lets users circle or highlight part of whatever is on the screen and get assistance via artificial intelligence.

A diner could highlight the text of a foreign-language menu on the phone screen and get a quick translation without switching to another app like Google Translate or use Circle to Search to identify an image of a dish in the menu or a statue outside the restaurant.

The new travel features are the first of many, according to Google. “We really see this as a starting point,” said Craig Ewer, a Google communications manager.

Did we miss anything? Make a mistake? Do you have an idea for anything we’re up to? Let’s collaborate! sree@sree.net and please connect w/ me: Twitter | Instagram | LinkedIn | YouTube / Threads

Thank you for your thoughts. I'm finding it interesting that many people who know what they're talking about, like Molly White, are as of now of the opinion that AI isn't doing much for most people. She may be right, but I think that once people learn to use AI, there will be a lot of good outcomes, and I hope we will be able to focus on them through the tsunami of bad outcomes.