Don't Call Them AI "Hallucinations!"

Mistakes are mistakes, and some can be quite consequential.

Fiction: Multiple AI mistakes about Sree in the image and text above, including making him Bill Clinton’s chief speechwriter and executive editor of the NYT! (Slide from his AI workshops.)

Facts: Sree’s newsletter is produced with Zach Peterson (@zachprague). Digimentors Tech Tip from Robert S. Anthony (@newyorkbob). Our sponsorship kit.

🗞 @Sree’s #NYTReadalong: Sunday’s guest was Arlene Schulman, writer and photographer, who was featured in the NYT for her amazing rent-controlled apartment. Watch a recording. You’ll find three years’ worth of archives at this link (we’ve been reading the paper aloud on social for 8+ years now!). The Readalong is sponsored by Muck Rack. Interested in sponsorship opportunities? Email sree@digimentors.group and neil@digimentors.group.

🎯 Work with us! Our company, Digimentors, does digital and social consulting, as well as virtual/hybrid events production and all kinds of training. See our updated brochure (would love your feedback). Get in touch (no project too big or too small): sree@digimentors.group and neil@digimentors.group. If you’d rather chat, here’s my Calendly.

🤖 I’m now offering workshops about AI, ChatGPT, etc. They’re 20 minutes to 3 hours long, remote or in-person. No audience is too big or too small. If you know of any opportunities to present these (customized for businesses, nonprofits, schools, etc.), LMK: sree@digimentors.group. Here’s the brochure for my non-scary guide to AI: http://bit.ly/sreeai2024

***

“They’re not hallucinations! They are mistakes, errors, big f***ing deals!” — me, to anyone who will listen.

***

WE CAN’T GET ENOUGH OF A GOOD EUPHEMISM. If you’ve even brushed up against political journalism in the last century, euphemisms will be old hat, but we just can’t seem to quit them.

There’s an extensive body of work on the topic, and generative AI has bestowed upon us what may be the most laughable one of them all: calling outright mistakes “hallucinations.”

I don’t think you can evangelize a technology like the tech world evangelizes AI and get away with calling mistakes “hallucinations.” It’s literal double-speak, and we’re eating it up.

So this is my campaign to stop calling them hallucinations. Let’s not let AI off the hook by using that term.

Here’s IBM’s scientific-enough outline of what AI hallucinations are and why they happen (or, better, why IBM thinks they happen). It’s the perfect example of the Bureaucratic Style as detailed by Colin Dickey in 2017.

This is how the IBM document opens:

AI hallucination is a phenomenon wherein a large language model (LLM)—often a generative AI chatbot or computer vision tool—perceives patterns or objects that are nonexistent or imperceptible to human observers, creating outputs that are nonsensical or altogether inaccurate.

Generally, if a user makes a request of a generative AI tool, they desire an output that appropriately addresses the prompt (i.e., a correct answer to a question). However, sometimes AI algorithms produce outputs that are not based on training data, are incorrectly decoded by the transformer or do not follow any identifiable pattern. In other words, it “hallucinates” the response.

Generally, if I buy the ingredients for a lovely bolognese, I desire the output of a lovely bolognese. If my output is chicken parm, it’s weird, and it would be tough to describe why or how I made chicken parm. The difference here is, of course, that my completely illogical, almost impossible mistake has no real-world consequences beyond my own disappointment and confusion. It does not result in a medical misdiagnosis. It does not result in a wrongfully rejected mortgage application. It does not paint a family home as a target for airstrikes. It does not spin up a quick news item about Hillary Clinton’s secret life as a lizard in a local pizzeria.

There is the potential for some huge misses as a result of AI error, and I also wonder about the consequences at the micro level that will be overlooked as macro trends shift. People are getting audited because some AI tax bot made a mistake. A restaurant is overpaying for produce because an AI pricing program went haywire. It’s things like this that have a real, tangible, visible effect on day-to-day lives, and I can only imagine how many people have already been wrongfully excluded, penalized, or worse because of some black-box algorithm somewhere.

To IBM’s credit, it is very upfront about the consequences of off-the-rails AI:

AI hallucination can have significant consequences for real-world applications. For example, a healthcare AI model might incorrectly identify a benign skin lesion as malignant, leading to unnecessary medical interventions. AI hallucination problems can also contribute to the spread of misinformation. If, for instance, hallucinating news bots respond to queries about a developing emergency with information that hasn’t been fact-checked, it can quickly spread falsehoods that undermine mitigation efforts. One significant source of hallucination in machine learning algorithms is input bias. If an AI model is trained on a dataset comprising biased or unrepresentative data, it may hallucinate patterns or features that reflect these biases.

This strikes me as a huge “what if” for a technology that is becoming pervasive across society. It’s an especially significant “what if” when you consider that the U.S. government is unlikely to regulate AI in any meaningful way any time soon. The Biden Administration’s Executive Order on AI is a start, of course, but it’s not a law, has no real enforcement mechanisms, and will do little in the way of safeguarding or protecting much of anything. The U.S. Congress continues to prove itself as ineffectual as ever, and it’s hard to see a comprehensive, meaningful law moving through the D.C. sausage machine any time soon. If the fervor over a potential TikTok ban (or forced sale) has shown us anything, it’s that there’s little in the way of intellectual rigor flowing through the halls of Congress.

This is why it’s so important for us to use precise language when talking about major upheavals, and succumbing to the Bureaucratic Style when discussing AI will not do us any favors. AI models do not “hallucinate.” They make mistakes, sometimes very significant ones, and there’s no way we know of to prevent them.

It’s also important to understand that the mistakes AI is making right now, and will make in the future, are fundamental to the technology’s “machine-hood.” This is, to me, a good thing. I love using generative AI, and I use it and speak about it often. The thing I keep coming back to is that, in most cases, it gives me something close to what I ask for. Knowing that this sort of imperfection is built into a command-and-response system gives me some hope that doomsday scenarios of rogue AIs, while not necessarily far-fetched, are far off.

It’s all about the training data, and the supply of training data is drying up quite quickly. Estimates vary, but some people in the field say that available training data will become severely limited within the next few years. The availability of good data, combined with a given AI system’s capacity to hold and process that data, puts de facto limits on AI as currently constructed. Here’s a great write-up from Lauren Leffer for Scientific American about the inevitability of AI hallucinations.

From the piece:

As more people and businesses rely on chatbots for factual information, their tendency to make things up becomes even more apparent and disruptive.

But today’s LLMs were never designed to be purely accurate. They were created to create—to generate—says Subbarao Kambhampati, a computer science professor who researches artificial intelligence at Arizona State University. “The reality is: there’s no way to guarantee the factuality of what is generated,” he explains, adding that all computer-generated “creativity is hallucination, to some extent.”

Last week, I attended a lunch-and-learn about AI hosted by NYU Journalism School’s Ethics & Journalism Initiative. Zach Seward, NYT’s editorial director of AI initiatives, shared great insights about how news organizations are using AI (because he’s so new at the NYT, his examples were mainly from elsewhere; his slides are here). I asked Zach about AI hallucinations, and he arrived at the word “euphemism” as a descriptor almost immediately in his response.

So let’s remove “hallucinations” from our news coverage, our offices, our classrooms. The AI folks certainly won’t.

— Sree

Twitter | Instagram | LinkedIn | YouTube | Threads

Sponsor message

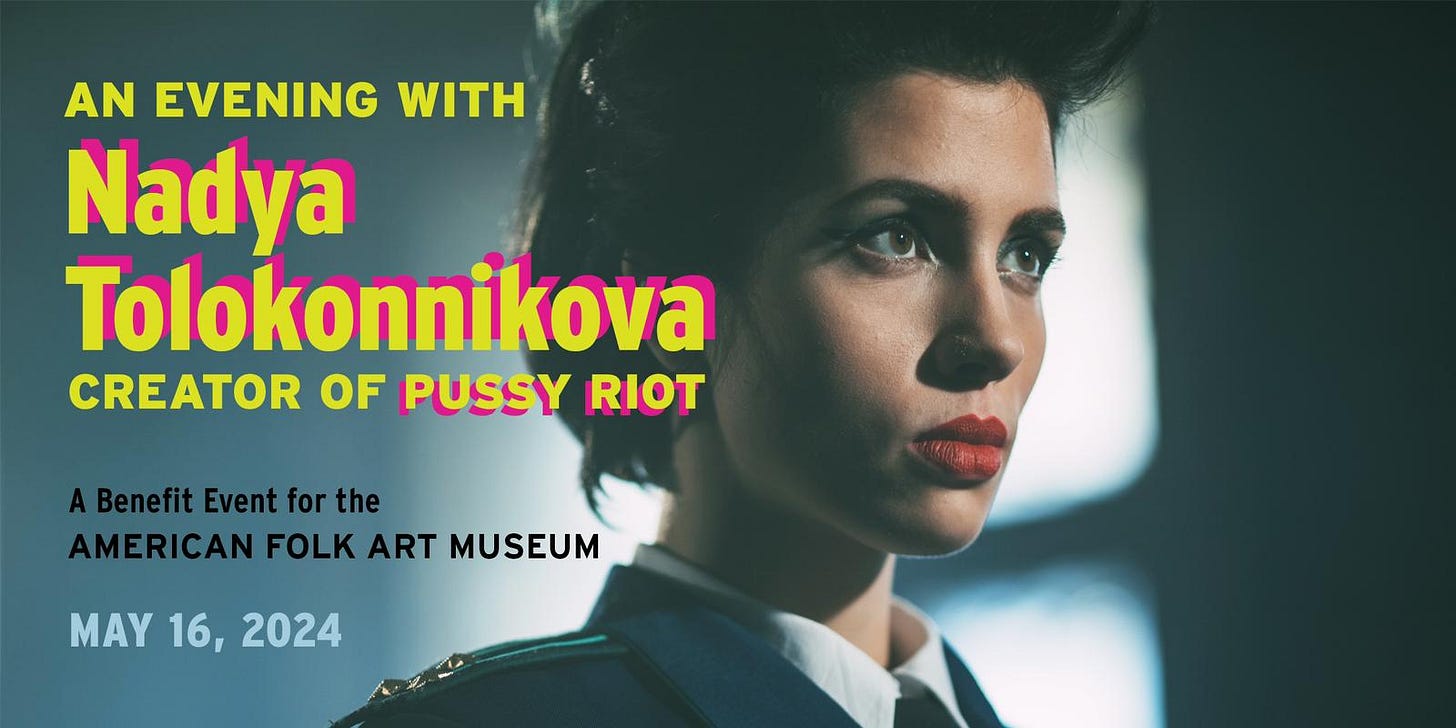

Nadya Tolokonnikova Headlines American Folk Art Museum Benefit Event

This special event is planned for Thursday, May 16, 2024, at the Society for Ethical Culture at 2 W 64th St, New York, NY 10023. The event will showcase Tolokonnikova’s recent artworks in a pop-up exhibition and feature what is sure to be a captivating Q&A session about her practice, followed by a musical performance. Ticket sales will benefit the American Folk Art Museum’s mission to support and champion the work of self-taught and folk artists. Learn more here.

DIGIMENTORS TECH TIP | Asus Zenfone 11 Ultra: Flagship Smartphone Challenges Big Dogs

By Robert S. Anthony

Each week, veteran tech journalist Bob Anthony shares a tech tip you don’t want to miss. Follow him @newyorkbob.

It doesn’t get the media attention of Apple or Samsung, but global computer giant Asus has been making quality cell phones for decades. Its new Zenfone 11 Ultra shows that the company doesn’t plan on being left behind.

With its $899.99 price tag, 6.78-inch AMOLED display and 256 GB of storage, the Asus Zenfone 11 Ultra sits between more expensive flagship smartphones like Apple’s iPhone 15 Plus ($999 with 6.7-inch display and 256 GB of storage) or Samsung’s Galaxy S24+ ($999.99 with 6.7-inch screen and 256 GB of storage) and less expensive, less powerful “budget phones.”

While it’s known for its laptops, PCs, motherboards and other computer components, Asus was already making flip phones in 2003. Its Zenfone series of Android smartphones debuted in 2014.

The new unit, essentially a recrafted version of the ROG Phone 8 (a Republic of Gamers-branded smartphone optimized for gaming), is a marked departure from the Zenfone 10, which, with its relatively diminutive 5.9-inch display, was promoted as an easily pocketable alternative to large, bulky smartphones.

The Asus Zenfone 11 Ultra doesn’t scrimp on muscle: It uses the same powerful Qualcomm Snapdragon 8 Gen 3 processor used in many top-shelf smartphones. The built-in 12 GB of RAM provides more than enough data-processing memory for the most sophisticated apps, and its 256 GB of internal storage is ample for thousands of photos and long videos, albeit without the ability to expand storage with a removable memory card.

Its power-efficient AMOLED display can adjust its refresh rate from 1 to 120 Hz. It won’t refresh the screen often if you’re viewing, for example, static text, thus saving power, but will increase the rate as necessary if you’re viewing a video or a robust web page. When gaming, the screen can be boosted to 144 Hz, thus allowing gamers to keep up with their online competition.

Its rear camera array includes a 50MP stabilized main rear camera with 2x zoom, a 32MP telephoto camera with 3x zoom and a 13MP ultrawide camera. On the front is a 32MP selfie camera with a 90-degree field of view. The unit also has a fast-charging 5,500 mAh battery which supports 65-watt wired charging and 15-watt wireless charging.

Like other flagship smartphones, the Zenfone 11 Ultra weaves artificial intelligence into its imaging and audio systems. An AI-assisted noise-cancellation feature identifies and suppresses extraneous noises, thus making voice calls clearer, according to Asus, while an AI-powered instant translator lets users speak in their native language and have it come out translated on the other end. A HyperClarity feature uses AI to enhance and reconstruct detail in zoomed images, thus improving clarity, according to Asus.

Thanks to its status as the world’s fifth-largest personal computer maker behind Lenovo, HP, Dell and Apple, Asus doesn’t need to depend on low pricing to carve out its small (0.16 percent, according to Statcounter) but significant slice of the global smartphone market. The Asus Zenfone 11 Ultra is available in blue, black, gray or sand and is on sale now.

Did we miss anything? Make a mistake? Do you have an idea for anything we’re up to? Let’s collaborate! Email sree@sree.net, and please connect with me: Twitter | Instagram | LinkedIn | YouTube | Threads

Good points, Sree. And saying that AI tools have "hallucinations" is another example of how we humans anthropomorphize machines.

I have found these “hallucinations” to be deliberate in the case of one AI tool that creates art and images: the tool always misspells some word in the result. The explanation given is totally nonsensical.