Social Media is the New Cigarettes

U.S. Surgeon General Vivek Murthy's warnings aren't just for kids.

My then-12yo twins, Durga and Krishna, at the 2015 India Abroad Person of the Year ceremony with Surgeon General Vivek Murthy and former Miss USA Nina Davuluri.

Sree’s newsletter is produced with Zach Peterson (@zachprague). Digimentors Tech Tip from Robert S. Anthony (@newyorkbob). Our sponsorship kit.

🗞 @Sree’s #NYTReadalong: Recent guests include Ali Velshi of MSNBC, Steve Herman of VOA and Justin Nobel, author of “Petroleum-238: Big Oil's Dangerous Secret and the Grassroots Fight to Stop It.” Here’s the recording of Justin’s episode; here’s Ali’s; here’s Steve’s. You’ll find three years’ worth of archives at this link (we’ve been reading newspapers aloud on social for 8+ years now). The Readalong is sponsored by Muck Rack. Interested in sponsorship opportunities? Email sree@digimentors.group and neil@digimentors.group.

🤖 Am teaching my “Non-Scary Guide to AI” workshops in multiple cities this summer — Istanbul, London, Delhi, Bhopal, Chennai, Houston, Chicago — and via Zoom. LMK if you’d like to create your own customized workshop. Or if you need help with AI strategy, policy or scenario planning. Here’s the brochure: http://bit.ly/sreeai2024.

***

THE REGULATORS ARE COMING FOR SOCIAL MEDIA and that’s a good thing. I’ve been telling parents the following for years: “Every set of six months you can delay a young teen from joining platforms like TikTok, Instagram, Snapchat, etc, you’re saving them years of mental health issues down the road.”

But “Should the government regulate social media?” is a vague question that usually elicits a quick “yes” from anyone asked. On the question of “how?” we seem to be all over the map.

The calls for regulation are by and large coming from a place of protecting the mental health of young people, particularly young girls. The available research on social media’s relationship to declining mental health is not as ironclad as advertised, but I’m not sure that’s necessarily the issue here. Sure, American teenagers spend an average of five hours per day on social media, most of that on YouTube, TikTok and Instagram. Yes, the majority of high-frequency social media users report low levels of parental monitoring and weak parental relationships. And, of course, these kids are more vulnerable to depression and poor mental health than their peers. Even if none of these numbers were true, I think it’s not really debatable that heavy social media use affects people (sometimes very strongly and adversely), and it’s not like social media is going anywhere.

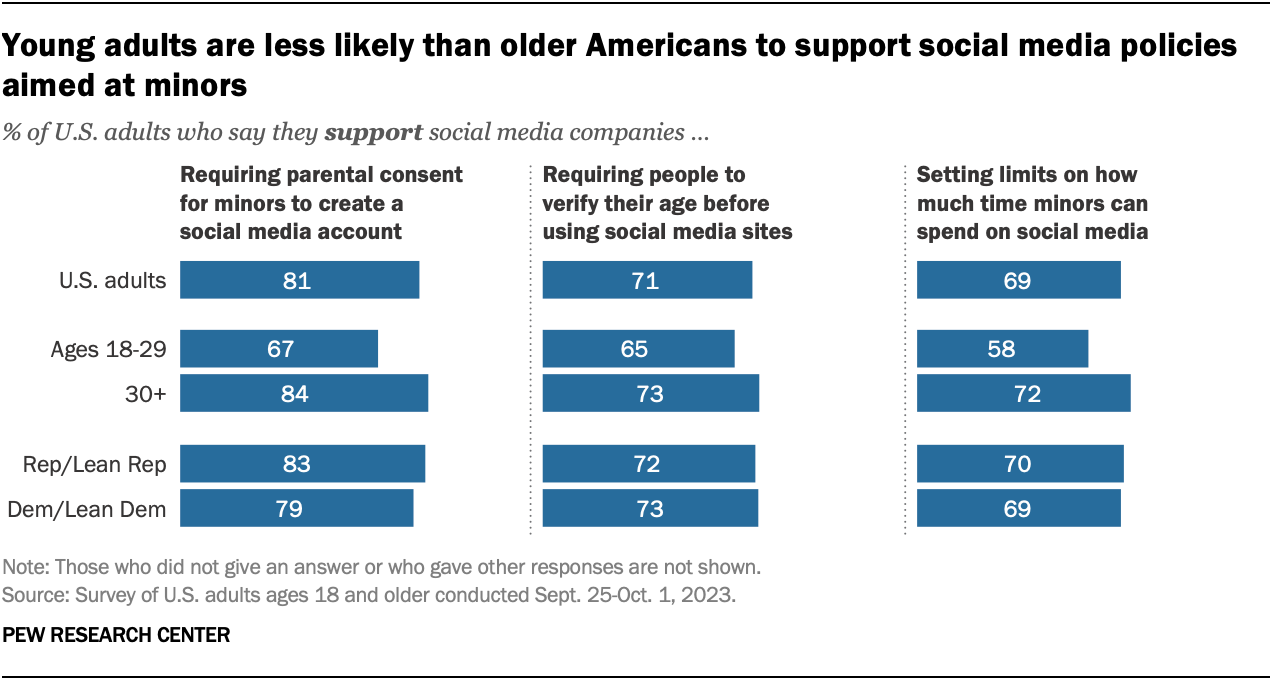

This may come as a shock, but parents are more amenable to stricter rules around social media than young people are. Still, the gap is not as severe as you may think. From Pew Research Center:

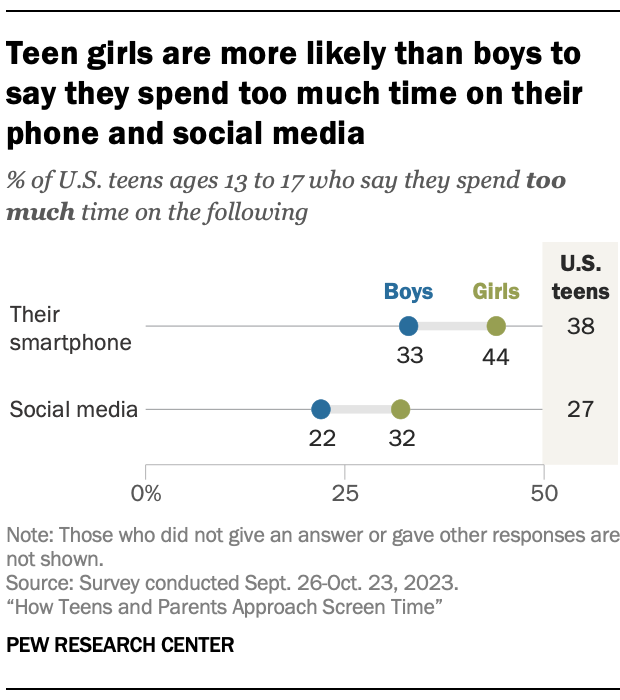

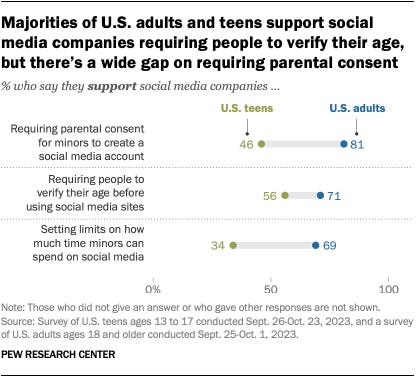

The numbers for younger folks here are telling, though. By any measure, people aged 18-29 largely agree that parental consent, age verification and time limits are good things. Go a little younger and the tune changes:

Age verification appears to be the low-hanging fruit here on the regulatory side, but the questions from Pew, and the status quo, are all about the social media companies themselves implementing and enforcing those age restrictions. My guess is that we will see a lot of states adopt such laws and take back anything they can from the tech giants. But therein lies the problem: state-level regulation of such things strikes me as the exact wrong way to do it. Sure, the states-as-laboratories-of-democracy idea has its merits, but if we are going to regulate social media, it simply has to be at the national level.

Enter Surgeon General Vivek Murthy and his much-discussed op-ed in the NYT a few weeks ago: Why I’m Calling for a Warning Label on Social Media Platforms.

The idea of warning labels is a good one, but it falls into the “it can’t hurt” category rather than being a real solution. Murthy makes a good case, pointing to bullying, body-shaming, and the general toxicity of online social life for young people. But, as he points out in the piece, it truly does take a village, and no amount of legislation or regulation will change that:

Schools should ensure that classroom learning and social time are phone-free experiences. Parents, too, should create phone-free zones around bedtime, meals and social gatherings to safeguard their kids’ sleep and real-life connections — both of which have direct effects on mental health. And they should wait until after middle school to allow their kids access to social media. This is much easier said than done, which is why parents should work together with other families to establish shared rules, so no parents have to struggle alone or feel guilty when their teens say they are the only one who has to endure limits. And young people can build on teen-focused efforts like the Log Off movement and Wired Human to support one another in reforming their relationship with social media and navigating online environments safely.

To his credit, Murthy is a very thoughtful person who really cares about such things—it’s not just his job. In May 2023, I wrote about rampant, epidemic levels of loneliness in America based on an advisory from his desk, and much of that rings true here. The doom scroll has replaced meaningful interpersonal interactions and we are all worse off for it.

I think all adults, and especially every parent out there, need to take a long hard look at what social media has done to us. Beyond the fact that untold numbers of children simply do not live in parenting environments where this sort of interaction is possible, many of those same children certainly turn to social media as a means to escape it.

Since time immemorial, the olds have enacted rules and laws to protect kids from themselves but, with social media, the hypocrisy abounds. Alcohol and cigarettes are the banner vices, and countless lives have been upended due to their over-consumption, but social media strikes me as a different animal. Adults have, frankly, not done well with social media. Its ills go beyond lung cancer and alcoholism, and I think any regulation that goes beyond age restrictions should be considered in terms of adults as well.

Social media has played a significant role in so many tragedies perpetrated by adults that I don’t know where to start. News literacy is in the tank, no amount of de-platforming (see: self-regulation) has worked, and local news is on its last collective legs. The very existence of social media has contributed to all of these things—QAnon, the rise of anti-vaxxers, and on and on and on. So, perhaps we should be thinking more about regulations for all age groups, not just kids.

Take New York’s new laws, signed by Governor Kathy Hochul in late June. One, the SAFE for Kids Act, requires parental consent before children can see algorithmic feeds on their social media accounts. Here’s how the bill’s supporters describe it (via The Verge):

Sponsors of New York’s SAFE for Kids Act wrote that its purpose is to “protect the mental health of children from addictive feeds used by social media platforms, and from disrupted sleep due to night-time use of social media.” In addition to the algorithm restrictions, it would bar platforms from sending notifications to minors between midnight and 6AM without their parent’s consent. The bill instructs the attorney general’s office to lay out appropriate age verification methods and says those can’t solely rely on biometrics or government identification. The law would take effect 180 days after the AG’s rules, and the state could then fine companies $5,000 per violation.

Honestly, most of this sounds like stuff that could be made universal, regardless of age. The other overarching issue for me is that, in regulating such things, we may in fact be hurting kids who are already marginalized in some way and using social media to find friends, communities, and other positive things. As is always the way with such things, there has to be a good-outweighs-the-bad discussion, and in the case of the New York law, I think the good does indeed outweigh the bad. Of course, it will be some time before we see the effects of the law, if there are any, but it’s an interesting idea.

Look at the platforms on this list (especially YouTube!) and imagine them all without algorithmic feeds:

It’s a totally different ballgame if the YouTube Shorts feed and suggested videos are upended. Without its algorithmic feed, TikTok likely doesn’t achieve the heights it is at right now—same for Instagram. Of course, it’s the algorithm that gives TikTok and others their power and where they make their money.

Would our minds and attention spans be in a better place if we only saw content from people we actually followed? I’m betting yes.

— Sree

Twitter | Instagram | LinkedIn | YouTube | Threads

Promotional message

Be smarter at cocktail parties with THIS WEEK, THOSE BOOKS

THIS WEEK, THOSE BOOKS: A quick weekly newsletter and five-minute podcast that connect the dots on the week's big news story and the world of books. A literary take on the news from London-based journalist and writer, news junkie and passionate book reader Rashmee Roshan Lall (and my boss a lifetime ago!). Makes you smarter, faster. More than 10,000 readers in 110 countries agree. Sign up here.

DIGIMENTORS TECH TIP | Reolink Argus 4 Pro: Security Cam Sees in the Dark in Full Color

By Robert S. Anthony

Each week, veteran tech journalist Bob Anthony shares a tech tip you don’t want to miss. Follow him @newyorkbob on Twitter and check out his 1.1 million followers on Pinterest!

Things that go bump in the night make for cool fairy tale characters, but, of course, they’re rather serious issues if they’re skulking around your home. Security cameras with night vision aren’t new, but a fresh entry from Reolink promises full, high-resolution views into the dark in full color.

The Reolink Argus 4 Pro security camera can see both visible and infrared light and combine the two into a bright 4K-resolution nighttime video stream without the need for additional lighting thanks to Reolink’s ColorX technology.

The new unit, launched at a recent New York press event, features twin light-gathering F1.0 4mm lenses and internal 1.125-inch sensors which combine their video feeds into a single, seamless 180-degree video stream without blind spots, according to Reolink.

As with most security cameras, video recording starts only when motion is detected, but the unit adds artificial intelligence to distinguish between people, animals, vehicles and other moving objects. A free app lets users adjust motion-detection zones and set privacy masks.

The wireless Reolink Argus 4 Pro supports high-speed, dual-band Wi-Fi 6 connectivity, which can handle faster data speeds and more data streams between the router and connected devices than previous versions of Wi-Fi. This gives the unit ample bandwidth to stream jitter-free 4K color video, according to Reolink.

The weatherproof, battery-powered unit can be attached to a building, tree or post and kept charged with a solar panel or used indoors with a standard USB-C phone charger. According to Reolink, just 10 minutes of sunlight on the solar panel keeps the battery charged for 24 hours.

Video from the unit can be monitored with Reolink’s mobile app, which can receive alarms and notifications. The camera also has a built-in speaker and microphone, which allow users to communicate with anyone in sight of the unit.

The Argus 4 Pro has a microSD slot for memory cards of up to 128GB in capacity and can be linked with Reolink’s Home Hub, which has two microSD card slots, each of which supports cards of up to 512GB. The Home Hub can handle video streams from up to eight cameras.

The Argus 4 Pro lists for $199.99 alone or $219.99 with a solar panel, while the Reolink Home Hub lists for $99.99.

Did we miss anything? Make a mistake? Do you have an idea for anything we’re up to? Let’s collaborate! sree@sree.net and please connect w/ me: Twitter | Instagram | LinkedIn | YouTube | Threads

Imagine if we re-designed social media to care. #DreamProSocialMedia. https://suno.com/song/a3ea6a96-e1e6-4e28-a53f-f7970d6107bb